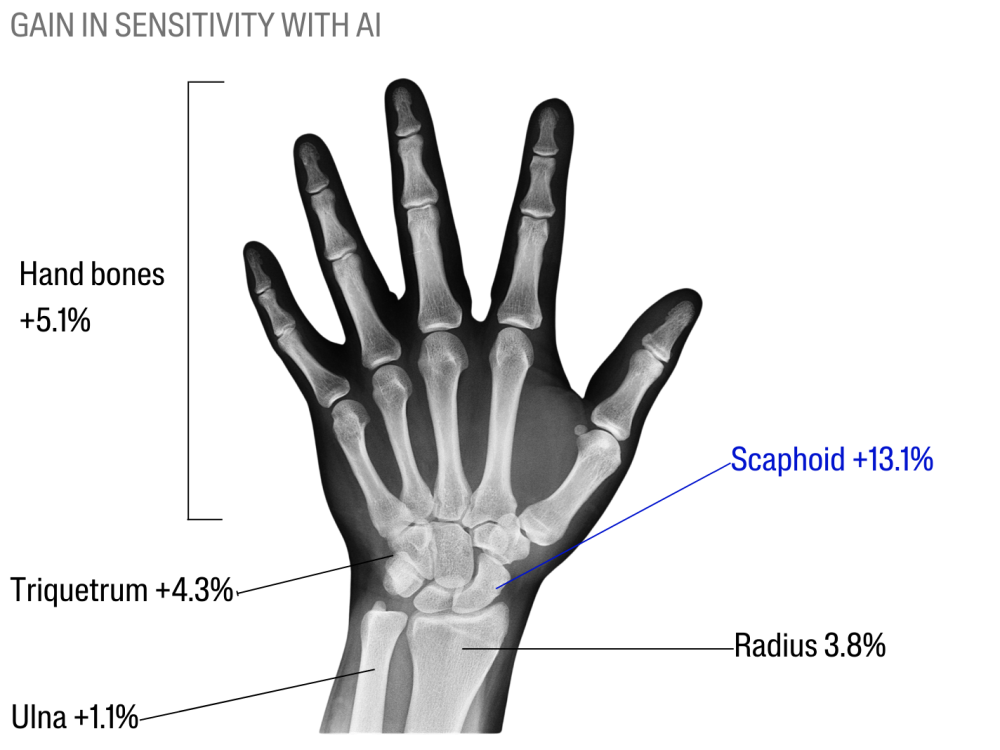

Commercially-available AI algorithm improves radiologists’ sensitivity for wrist and hand fracture detection on X-ray, compared to a CT-based ground truth

Objectives

Algorithms for fracture detection are spreading in clinical practice, but evaluating them against an X-ray-only ground truth can bias the results. This study assessed radiologists’ performance in detecting wrist and hand fractures on radiographs, with and without the assistance of a commercially available algorithm, against a computed tomography (CT) ground truth.

Methods

Post-traumatic hand and wrist CT examinations and their concomitant X-ray examinations were retrospectively gathered. Radiographs were labeled based on CT findings. The dataset comprised 296 consecutive cases: 118 normal (39.9%) and 178 pathological (60.1%), with a total of 267 fractures visible on CT. Twenty-three radiologists with various levels of experience reviewed all radiographs first without AI and then with it, blinded to the CT results.

Results

Using AI improved radiologists’ sensitivity (Se, 0.658 to 0.703, p < 0.0001) and negative predictive value (NPV, 0.585 to 0.618, p < 0.0001), without affecting their specificity (Sp, 0.885 vs 0.891, p = 0.91) or positive predictive value (PPV, 0.887 vs 0.899, p = 0.08). On the radiographic dataset, based on the CT ground truth, stand-alone AI performance was 0.771 (Se), 0.898 (Sp), 0.684 (NPV), 0.915 (PPV), and 0.764 (AUROC). These values are lower than previously reported, suggesting that the number of missed fractures may be underestimated in the AI literature.
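For readers less familiar with the four metrics reported above, the following minimal sketch shows how Se, Sp, PPV, and NPV are conventionally derived from a confusion matrix built against a reference standard (here, CT). The `Confusion` class, the `diagnostic_metrics` function, and the counts are hypothetical and purely illustrative; they are not the study's data, and the study's exact unit of analysis (per patient or per fracture) is not assumed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts of reader calls against the reference standard (hypothetical)."""
    tp: int  # fracture on reference, reader says fracture
    fp: int  # no fracture on reference, reader says fracture
    tn: int  # no fracture on reference, reader says normal
    fn: int  # fracture on reference, reader says normal


def diagnostic_metrics(c: Confusion) -> dict:
    """Return the standard diagnostic accuracy metrics as fractions in [0, 1]."""
    return {
        "Se": c.tp / (c.tp + c.fn),   # sensitivity: detected among true fractures
        "Sp": c.tn / (c.tn + c.fp),   # specificity: cleared among true normals
        "PPV": c.tp / (c.tp + c.fp),  # positive predictive value
        "NPV": c.tn / (c.tn + c.fn),  # negative predictive value
    }


# Illustrative counts only; NOT taken from the study.
example = Confusion(tp=80, fp=10, tn=90, fn=20)
metrics = diagnostic_metrics(example)

for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this formulation, every fracture that the reference standard reveals but the reader misses adds to `fn`, which lowers both Se and NPV. A more complete reference standard such as CT therefore tends to yield lower measured sensitivity than an X-ray-only ground truth.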

Conclusions

AI enabled radiologists to improve their sensitivity and negative predictive value for wrist and hand fracture detection on radiographs, without affecting their specificity or positive predictive value, compared to a CT-based ground truth. Using CT as the gold standard for X-ray labels is innovative; it yields poorer algorithm performance than reported elsewhere, but one that is probably closer to clinical reality.